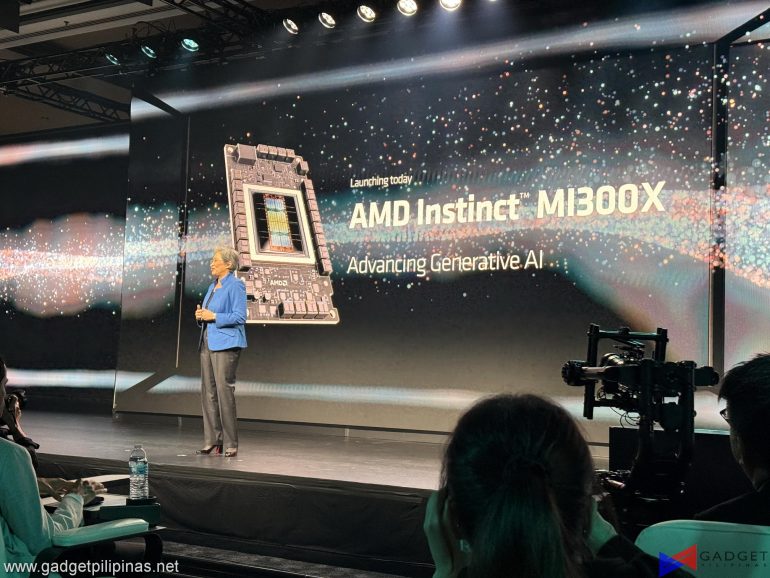

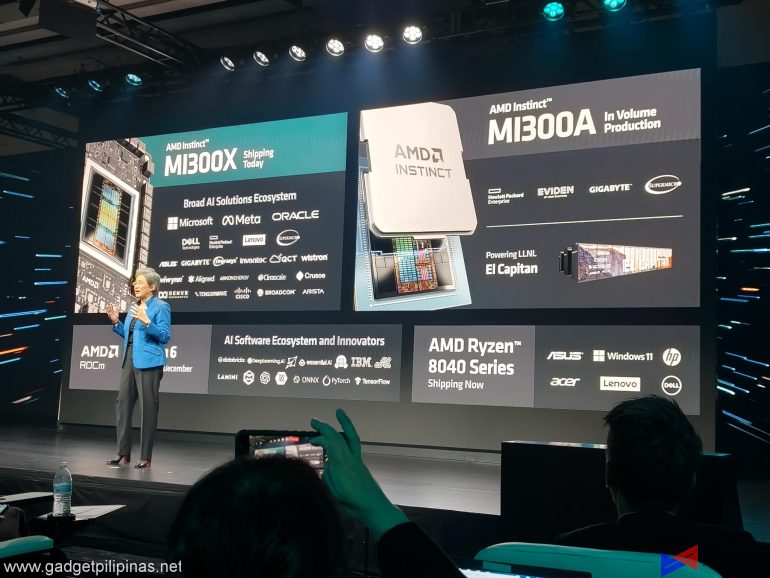

AMD has announced the release of AMD Instinct MI300X accelerators, featuring industry-leading memory bandwidth for generative AI and leading performance for large language model (LLM) training and inferencing. Additionally, the AMD Instinct MI300A accelerated processing unit (APU) combines the latest AMD CDNA 3 architecture and “Zen 4” CPUs, delivering groundbreaking performance for high-performance computing (HPC) and AI workloads.

“AMD Instinct MI300 Series accelerators are designed with our most advanced technologies, delivering leadership performance, and will be in large-scale cloud and enterprise deployments. By leveraging our leadership hardware, software, and open ecosystem approach, cloud providers, OEMs, and ODMs are bringing to market technologies that empower enterprises to adopt and deploy AI-powered solutions.”

-Victor Peng, AMD President

AMD Instinct MI300X Accelerator

AMD Instinct MI300X accelerators are built on the new AMD CDNA 3 architecture. Compared with the previous-generation AMD Instinct MI250X, the MI300X offers 40% more compute units, 1.5 times the memory capacity, and 1.7 times the peak theoretical memory bandwidth. It also adds support for new math formats such as FP8 and sparsity, all geared toward AI and high-performance computing (HPC) workloads.

To keep pace with increasingly large language models (LLMs), the MI300X offers a best-in-class 192 GB of HBM3 memory capacity and 5.3 TB/s of peak memory bandwidth. These accelerators form the core of the AMD Instinct Platform, a generative AI platform built on an industry-standard OCP design that houses eight MI300X accelerators for a combined 1.5 TB of HBM3 memory capacity. The standardized design lets OEM partners integrate MI300X accelerators into existing AI offerings, which simplifies deployment and speeds adoption. Compared with the Nvidia H100 HGX, the AMD Instinct Platform offers a 1.6x throughput improvement when running inference on LLMs such as BLOOM 176B. It is also uniquely capable of running inference for a 70B-parameter model such as Llama 2 on a single MI300X accelerator, which simplifies enterprise-class LLM deployments and improves total cost of ownership (TCO).
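The single-accelerator claim can be sanity-checked with back-of-the-envelope arithmetic. The sketch below estimates weight storage only, assuming 2 bytes per parameter for FP16 and 1 byte for FP8. The function names are illustrative, and real deployments also need room for the KV cache, activations, and runtime overhead, so the results should be read as lower bounds.

```python
import math

# Assumed storage cost per parameter for each precision (weights only).
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}

# Per-accelerator HBM3 capacity quoted in the article.
MI300X_HBM_GB = 192


def weights_gb(params_billions: float, precision: str) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def min_accelerators(params_billions: float, precision: str) -> int:
    """Smallest number of 192 GB accelerators whose combined HBM holds the weights."""
    return math.ceil(weights_gb(params_billions, precision) / MI300X_HBM_GB)


if __name__ == "__main__":
    for name, size in (("70B model", 70), ("176B model", 176)):
        for precision in BYTES_PER_PARAM:
            print(
                f"{name} @ {precision}: "
                f"{weights_gb(size, precision):.0f} GB of weights, "
                f"at least {min_accelerators(size, precision)} accelerator(s)"
            )
```

Under these assumptions, a 70B-parameter model needs about 140 GB for FP16 weights, which fits within one 192 GB MI300X, while a 176B-parameter model needs about 352 GB at FP16 and therefore at least two accelerators.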

AMD Instinct MI300A

AMD Instinct MI300A is the world’s first data center accelerated processing unit (APU) built specifically for high-performance computing (HPC) and artificial intelligence (AI). It uses 3D packaging and the 4th Gen AMD Infinity Architecture to deliver leadership performance on critical workloads at the intersection of HPC and AI. Each APU combines high-performance AMD CDNA 3 GPU cores, the latest AMD “Zen 4” x86-based CPU cores, and 128 GB of next-generation HBM3 memory. The result is approximately 1.9 times the performance per watt on FP32 HPC and AI workloads compared with the previous-generation AMD Instinct MI250X.

Energy efficiency is critical for HPC and AI, because these workloads are data- and resource-intensive. MI300A APUs improve efficiency by integrating CPU and GPU cores in a single package, which also delivers the compute performance needed to accelerate the training of the latest AI models. This supports AMD’s 30×25 goal, which targets a 30-fold improvement in the energy efficiency of server processors and accelerators for AI training and HPC between 2020 and 2025. The APU design also provides unified memory and cache resources, giving customers an easily programmable GPU platform, strong compute performance, fast AI training, and high energy efficiency for the most demanding HPC and AI workloads.

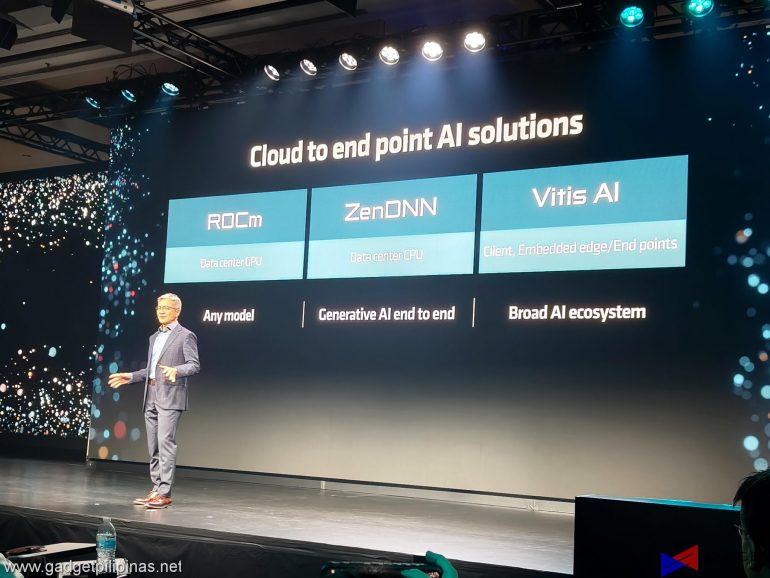

ROCm Software and Ecosystem Partners

AMD also announced the latest AMD ROCm™ 6 open software platform, reaffirming its commitment to the open-source community and to AI software development. ROCm 6 is a significant step forward for AMD’s software tools: running on MI300 Series accelerators, it delivers roughly an 8-fold increase in AI acceleration performance in Llama 2 text generation compared with previous-generation hardware and software.

ROCm 6 adds support for several key generative AI features, including FlashAttention, HIPGraph, and vLLM, among others. This lets AMD draw on the broad landscape of open-source AI software models, algorithms, and frameworks, such as Hugging Face, PyTorch, and TensorFlow, to drive innovation, simplify the deployment of AMD AI solutions, and unlock the potential of generative AI. AMD is also investing in its software capabilities through the acquisitions of Nod.AI and Mipsology and through ecosystem partnerships with Lamini and MosaicML. According to AMD, these efforts are aimed at making LLM training on AMD Instinct accelerators work through AMD ROCm with no code changes.
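To illustrate what running without code changes can look like from the framework side: ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` namespace that CUDA builds use, and set `torch.version.hip` to a version string. The sketch below is a minimal, hedged example of backend detection. The helper names `describe_backend` and `pick_device` are hypothetical, and the code falls back gracefully when PyTorch is not installed.

```python
def describe_backend() -> str:
    """Report which GPU backend, if any, the installed PyTorch build targets."""
    try:
        import torch
    except ImportError:
        return "pytorch-not-installed"
    if not torch.cuda.is_available():
        return "cpu"
    # ROCm builds set torch.version.hip to a version string;
    # CUDA builds leave it as None.
    return "rocm" if getattr(torch.version, "hip", None) else "cuda"


def pick_device() -> str:
    """Device string that existing CUDA-style code would use on either backend."""
    return "cuda" if describe_backend() in ("rocm", "cuda") else "cpu"


if __name__ == "__main__":
    print(f"backend={describe_backend()} device={pick_device()}")
```

Because the device string stays `"cuda"` on both backends, model code written as `model.to(pick_device())` would not need to branch on the GPU vendor.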

AMD Instinct Product Specs

| AMD Instinct | Architecture | GPU CUs | CPU Cores | Memory | Memory Bandwidth (Peak Theoretical) | Process Node | 3D Packaging w/ 4th Gen AMD Infinity Architecture |
| --- | --- | --- | --- | --- | --- | --- | --- |
| MI300A | AMD CDNA 3 | 228 | 24 “Zen 4” | 128 GB HBM3 | 5.3 TB/s | 5nm / 6nm | Yes |
| MI300X | AMD CDNA 3 | 304 | N/A | 192 GB HBM3 | 5.3 TB/s | 5nm / 6nm | Yes |
| Platform | AMD CDNA 3 | 2,432 | N/A | 1.5 TB HBM3 | 5.3 TB/s per OAM | 5nm / 6nm | Yes |
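The Platform row follows from the MI300X row multiplied by the eight accelerators the platform houses. The short sketch below reproduces that arithmetic; the `Accelerator` class and `platform_totals` function are illustrative names, not part of any AMD tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    compute_units: int
    hbm_gb: int


# Per-accelerator figures taken from the MI300X row of the spec table.
MI300X = Accelerator(name="MI300X", compute_units=304, hbm_gb=192)


def platform_totals(acc: Accelerator, count: int = 8) -> dict:
    """Sum per-accelerator compute units and memory across a platform."""
    return {
        "compute_units": acc.compute_units * count,
        "hbm_gb": acc.hbm_gb * count,
    }


if __name__ == "__main__":
    totals = platform_totals(MI300X)
    print(f"GPU CUs: {totals['compute_units']:,}")
    print(f"HBM3: {totals['hbm_gb']:,} GB (about {totals['hbm_gb'] / 1000:.1f} TB)")
```

Eight accelerators give 2,432 compute units and 1,536 GB of HBM3, which the table rounds to 1.5 TB.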

Grant is a Financial Management graduate from UST. His passion for gadgets and tech led him into the industry, where he applies both his knowledge as an enthusiast and the analytical skills he developed as a Finance major. That passion lets him earn a living while helping Gadget Pilipinas' readers make smart, value-based decisions and purchases through his reviews and guides.