AI Accelerators matter in this new age of computing

The field of artificial intelligence (AI) is evolving rapidly. New applications and advances are emerging at an unprecedented pace, and AI's role in this new age of computing is more relevant than ever.

This growth has led to an increased demand for computing power that can handle the complex calculations and data processing required for AI workloads. Traditional CPUs and GPUs are not always well-suited for these tasks, as they can be inefficient and power-hungry.

To address these challenges, AI accelerators have emerged as a specialized type of hardware designed to accelerate AI and machine learning (ML) applications. AI accelerators are tailored to the specific needs of AI workloads, offering significant improvements in performance, power efficiency, and cost-effectiveness.

What are AI Accelerators?

For the uninitiated, AI accelerators are specialized hardware components that are designed to accelerate AI and ML applications. They are typically integrated into servers, workstations, or edge devices to provide a performance boost for AI tasks. AI accelerators can be based on various architectures, including GPUs, FPGAs, and custom-designed ASICs.

How do AI Accelerators Work?

AI accelerators employ a variety of techniques to accelerate AI workloads. These techniques include:

- Optimized memory use: AI accelerators can use specialized memory architectures, such as high-bandwidth memory (HBM) and large on-chip caches, that are optimized for the data access patterns of AI algorithms.

- Lower-precision arithmetic: AI accelerators can use lower-precision number formats, such as 8-bit or 16-bit integer and floating-point formats, to reduce memory traffic and the cost of each operation while maintaining acceptable accuracy.

- Parallel processing: AI accelerators use massively parallel architectures that execute many operations at the same time, such as the multiply-accumulate steps of matrix multiplication, further improving performance.
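To make the lower-precision idea more concrete, here is a minimal sketch of symmetric INT8 quantization in plain Python. It assumes one shared scale factor per vector; real accelerators and frameworks use more elaborate schemes and perform the integer math in dedicated hardware. The function names are hypothetical. Each value is stored in 1 byte instead of the 4 bytes used by FP32, yet the dot product, the core operation of a neural network layer, stays close to the full-precision result.

```python
"""Minimal sketch of symmetric INT8 quantization (illustrative only).

Assumption: a single scale factor per vector maps floats into [-127, 127].
Function names are hypothetical, not from any particular library.
"""


def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(a, b):
    """Dot product computed on 8-bit integers, then rescaled to a float."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    # Integer multiply-accumulate: the step accelerators run massively in parallel.
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * sa * sb


weights = [0.12, -0.53, 0.98, 0.07, -0.31]
activations = [1.5, 0.25, -0.8, 2.0, 0.6]

exact = sum(w * x for w, x in zip(weights, activations))
approx = int8_dot(weights, activations)

print(f"Full-precision result: {exact:.4f}")
print(f"INT8 result:           {approx:.4f}")
print(f"Relative error:        {abs(approx - exact) / abs(exact):.2%}")
```

The trade-off is accuracy for efficiency: each integer multiply is cheaper and moves a quarter of the data of FP32, and for well-scaled values the error stays small.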

Benefits of AI Accelerators

AI accelerators offer several benefits over traditional CPUs and GPUs for AI workloads. These benefits include:

- Higher performance: AI accelerators can deliver significantly higher performance on AI workloads than general-purpose processors; depending on the workload, the gain over a CPU can be an order of magnitude or more.

- Improved power efficiency: AI accelerators are designed for efficiency, typically delivering more AI performance per watt than CPUs and GPUs.

- Lower cost: AI accelerators can be more cost-effective for AI workloads, especially for high-performance applications.

AMD AI Accelerator Products

AMD is a leading provider of AI accelerators, offering a range of products that cater to various needs and performance requirements. AMD’s AI accelerator products include:

- AMD Instinct MI250X AI Accelerator: The AMD Instinct MI250X is a high-performance accelerator based on the AMD CDNA 2 architecture. It is designed for demanding AI and HPC workloads, such as natural language processing and computer vision.

- AMD Instinct MI200 Series: The broader AMD Instinct MI200 series, which also includes the PCIe-based MI210, is likewise built on the AMD CDNA 2 architecture and offers more cost-effective options for a wide range of AI workloads.

- AMD Radeon Instinct Accelerator Cards: The earlier Radeon Instinct line of PCIe accelerator cards, which preceded the current AMD Instinct branding, provides a balance of performance, power efficiency, and cost-effectiveness for AI workloads.

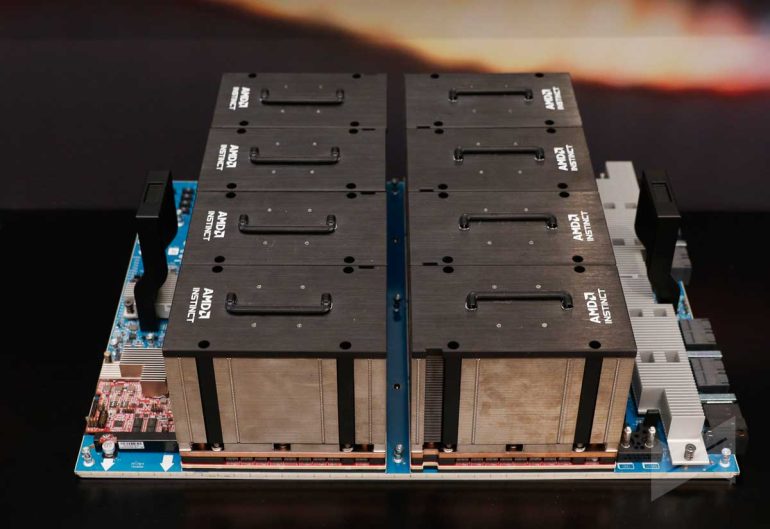

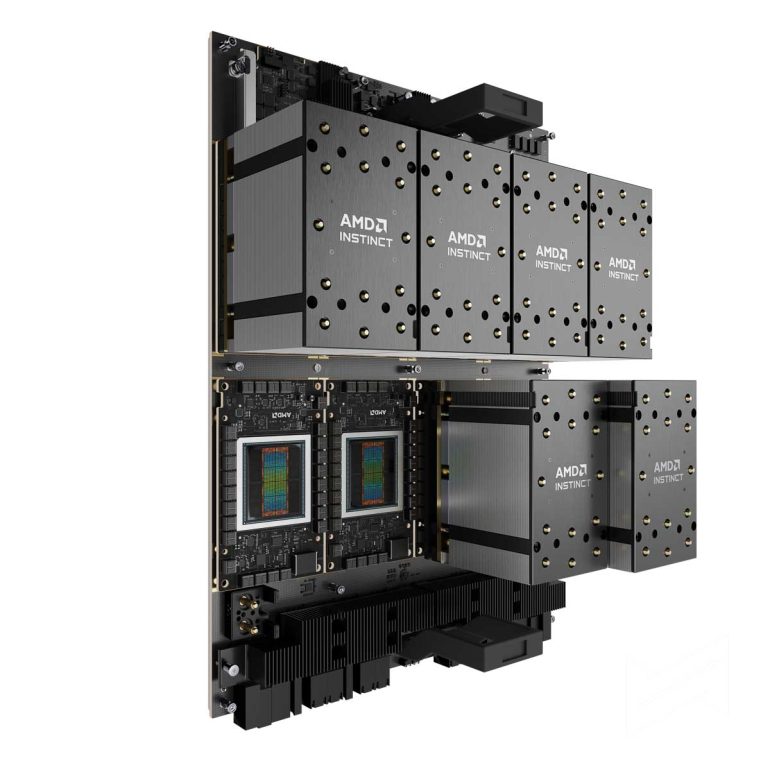

New AMD MI300A and AMD MI300X AI Accelerators

AMD announced the next generation of AMD Instinct accelerators (along with Ryzen AI for PCs) today, December 7, at 4 AM Philippine Time. You can read more in our separate article at this link.

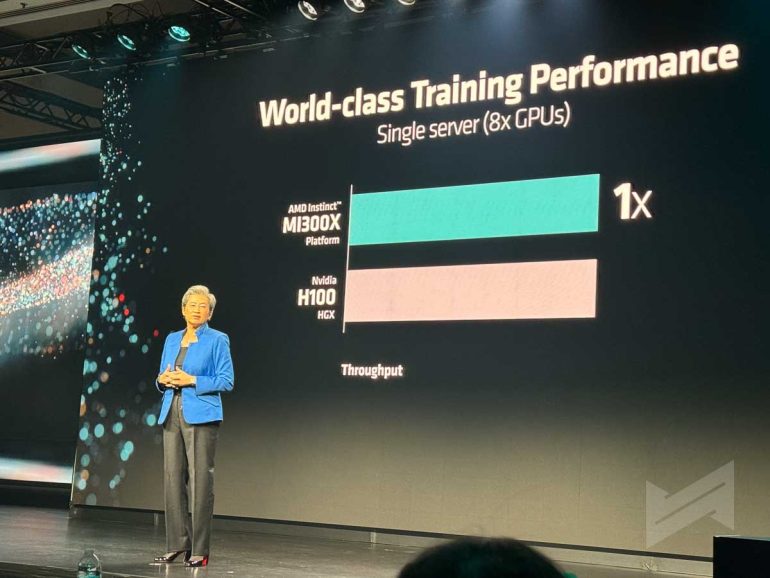

The AMD Instinct MI300X series is engineered for exceptional memory bandwidth, which makes it well suited to generative AI applications. AMD also positions it for both training and inference of large language models (LLMs).

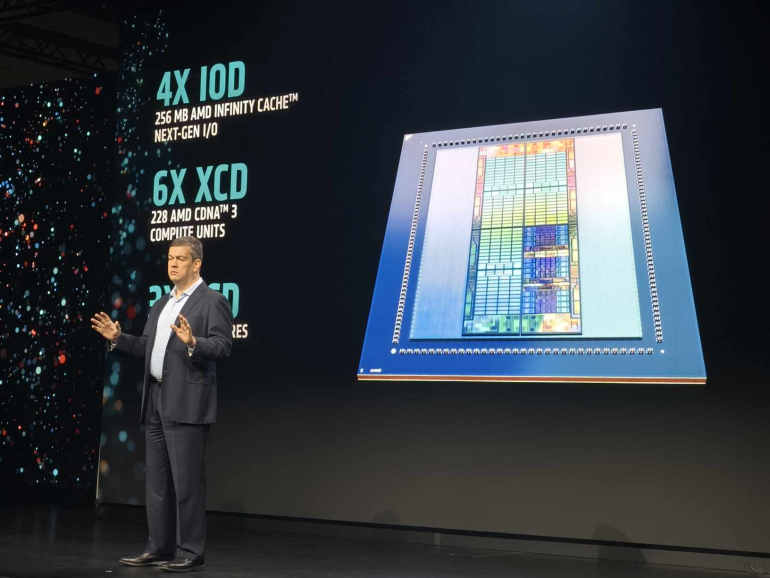

In addition, AMD introduced the AMD Instinct MI300A accelerated processing unit (APU), which combines AMD CDNA 3 GPU compute units and Zen 4 CPU cores in a single package with shared HBM3 memory. AMD says this design delivers breakthrough performance for high-performance computing (HPC) and AI workloads.

AMD Instinct MI300A Specs

- Architecture: AMD CDNA 3

- GPU CU: 228

- CPU Cores: 24 (Zen 4)

- Memory: 128GB HBM3

- Memory Bandwidth (Peak theoretical): 5.3 TB/s

- Process Node: 5nm/6nm

- Packaging: 3D packaging with 4th Gen AMD Infinity Architecture

AMD Instinct MI300X Specs

- Architecture: AMD CDNA 3

- GPU CU: 304

- CPU Cores: N/A (GPU-only accelerator)

- Memory: 192GB HBM3

- Memory Bandwidth (Peak theoretical): 5.3 TB/s

- Process Node: 5nm/6nm

- Packaging: 3D packaging with 4th Gen AMD Infinity Architecture
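Memory capacity and bandwidth matter for generative AI because, when a model generates text one token at a time, roughly all of its weights must be read from memory for each token. The sketch below is back-of-the-envelope arithmetic under that simplifying assumption, using the memory and peak theoretical bandwidth figures listed above and a hypothetical 70-billion-parameter model. It ignores the KV cache, activations, and software overhead, so real-world throughput would be lower.

```python
"""Back-of-the-envelope sketch: do a model's weights fit in on-package memory,
and what is the memory-bandwidth ceiling on single-stream token generation?

Assumptions (illustrative only):
- A hypothetical 70-billion-parameter model.
- Each generated token reads every weight once, so
  tokens/s <= bandwidth / weight_bytes.
- KV cache, activations, and software overhead are ignored.
"""

# Memory and peak theoretical bandwidth from the spec lists above.
SPECS = {
    "MI300A": {"memory_gb": 128, "bandwidth_tb_s": 5.3},
    "MI300X": {"memory_gb": 192, "bandwidth_tb_s": 5.3},
}

BYTES_PER_PARAM = {"FP16": 2, "INT8": 1}


def weight_size_gb(params_billion, precision):
    """Weight storage in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def max_tokens_per_s(bandwidth_tb_s, weights_gb):
    """Upper bound when every token streams all weights from memory once."""
    return bandwidth_tb_s * 1000 / weights_gb


params_billion = 70  # hypothetical model size
for name, spec in SPECS.items():
    for precision in BYTES_PER_PARAM:
        size = weight_size_gb(params_billion, precision)
        if size <= spec["memory_gb"]:
            ceiling = max_tokens_per_s(spec["bandwidth_tb_s"], size)
            print(f"{name} {precision}: {size} GB of weights, "
                  f"at most ~{ceiling:.0f} tokens/s")
        else:
            print(f"{name} {precision}: {size} GB of weights, "
                  f"does not fit in {spec['memory_gb']} GB")
```

Under these assumptions, the FP16 weights (140 GB) fit in the MI300X's 192GB but not in the MI300A's 128GB, while 8-bit weights fit on both and double the bandwidth ceiling. This is one reason large memory capacity and lower-precision formats tend to go hand in hand for LLM inference.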

The Role of AI Accelerators in the Evolving Landscape of Computing

AI accelerators are playing an increasingly important role in the evolving landscape of computing. As AI becomes more pervasive, AI accelerators will become essential for powering a wide range of applications, from data centers to edge devices.

Here are some of the key trends that are driving the adoption of AI accelerators:

- The growth of AI applications: The number of AI applications is growing rapidly, and these applications are becoming increasingly demanding.

- The need for real-time AI: Many AI applications require real-time performance, which traditional CPUs and GPUs cannot always provide.

- The increasing importance of edge computing: Edge computing is bringing AI processing closer to the source of the data, and AI accelerators are essential for enabling edge AI applications.

AI Accelerators are essential for powering the future of computing

AI accelerators are a transformative technology that is driving the evolution of computing. These specialized hardware components are enabling AI to reach new heights of performance and efficiency, and they are playing a critical role in the development of next-generation AI applications. As AI continues to grow in importance, AI accelerators will become even more essential for powering the future of computing.

Giancarlo Viterbo is a Filipino technology journalist, blogger, and editor of gadgetpilipinas.net. He is also a geek, dad, and husband. He knows a lot about washing the dishes, running errands, and following instructions from his boss at his day job. Follow him on Twitter: @gianviterbo and @gadgetpilipinas.